AI-powered code editor for GPU kernel development, custom agents, and stable SSH remote workflows

Product Hunt: #1 Product of the Day

★★★★★ 4.9/5.0 (100+ reviews)

See kernel metrics while typing across CUDA, Numba, Mojo, and CUDA Tile

Clear model list for cloud LLMs and local GPU-backed models

Run models locally with Ollama, vLLM, or LM Studio. Your code never leaves your machine.

Tells you exactly what's wrong and how to fix it, locally or over SSH

From code to deployment in 4 steps

Write GPU code in your preferred DSL

RightNow supports multiple GPU programming languages and domain-specific languages, including Numba, Mojo, and CUDA Tile.

Native CUDA C/C++

OpenAI Triton

Mojo GPU kernels

PyTorch Framework

CUTLASS Templates

Tile-based CUDA kernels

Numba GPU kernels

Tile Language DSL


What power users actually need

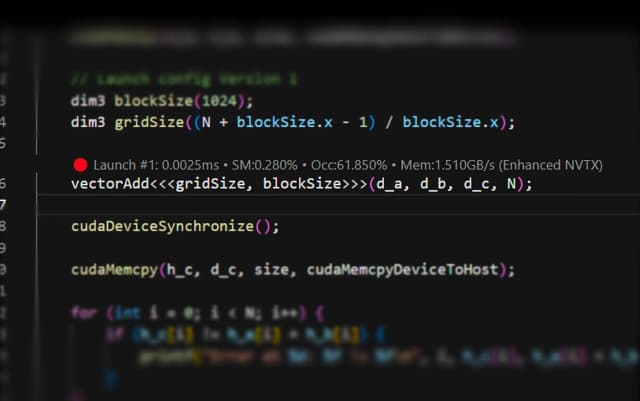

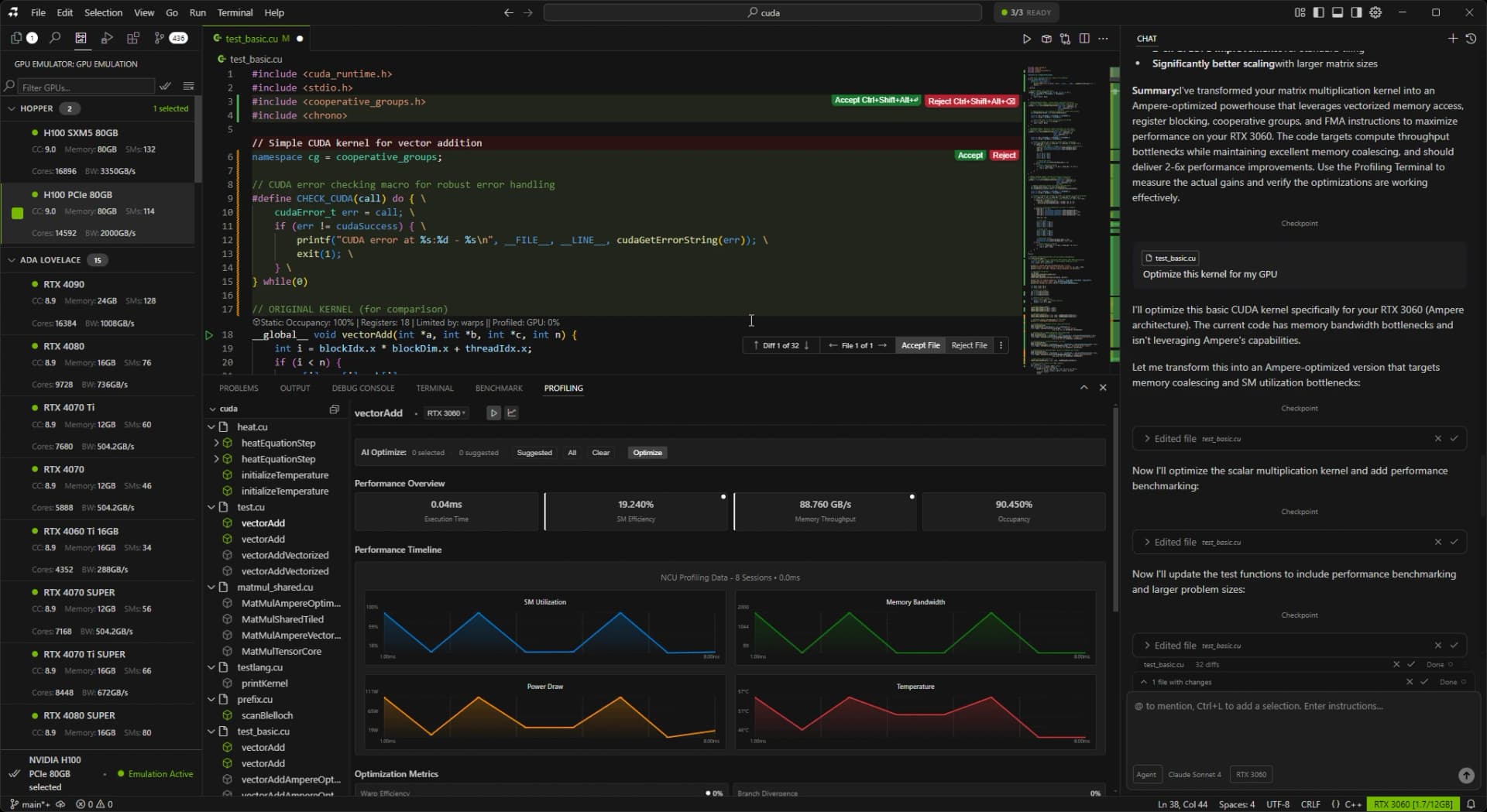

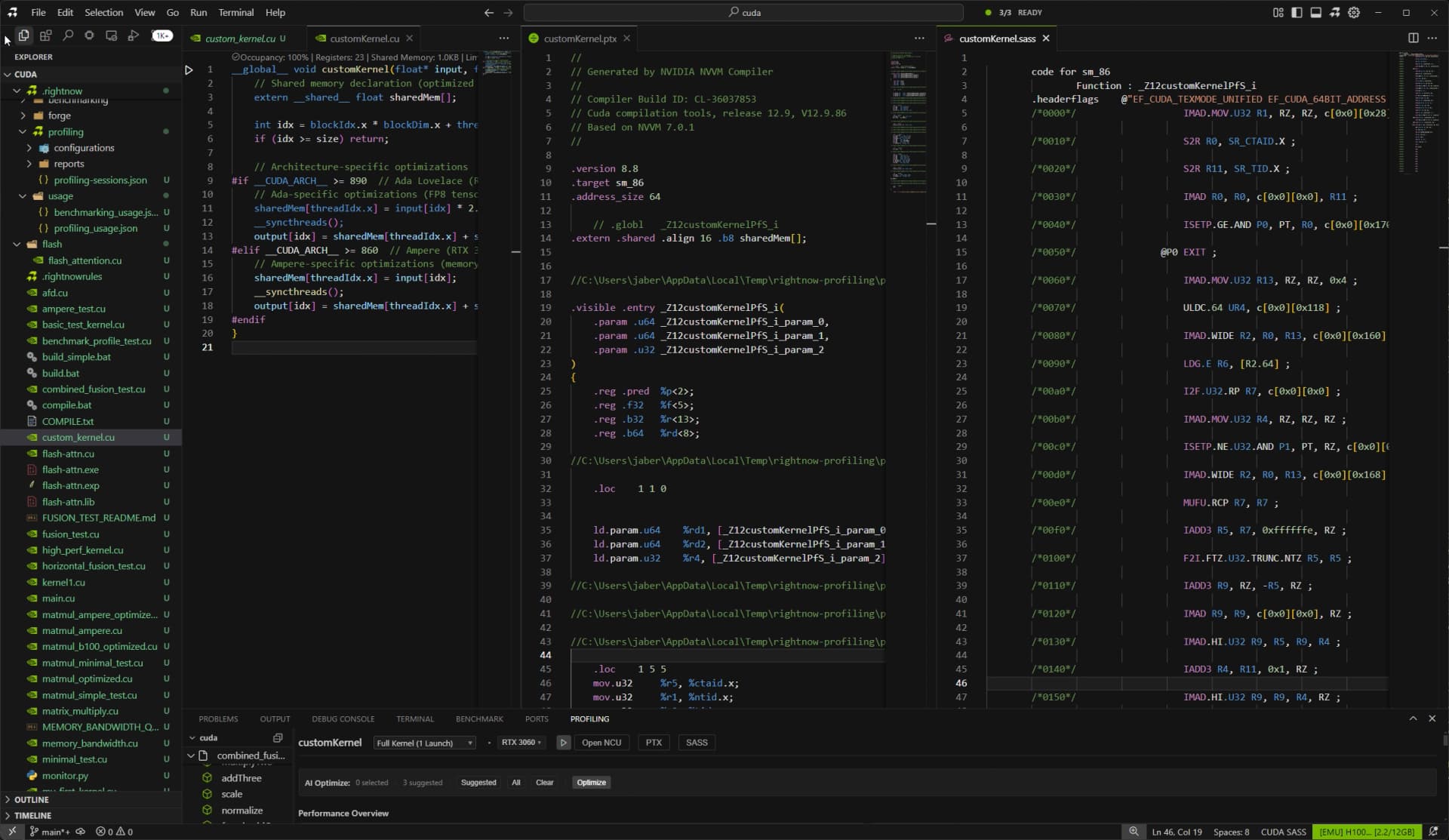

See what your GPU actually executes

Godbolt-style assembly view for GPU kernels. Hover on any line to see the PTX and SASS instructions.

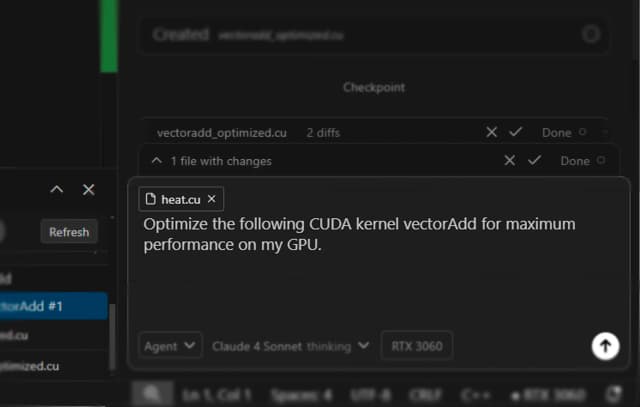

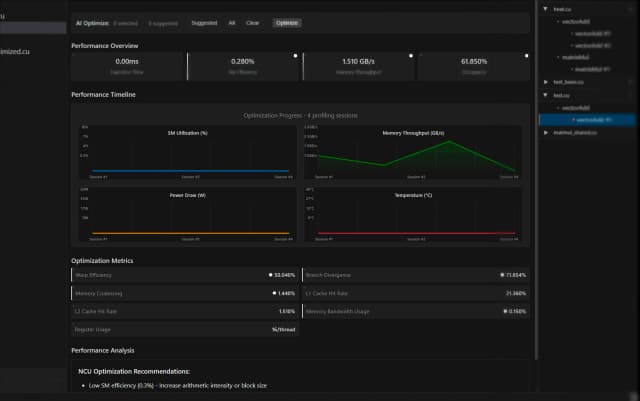

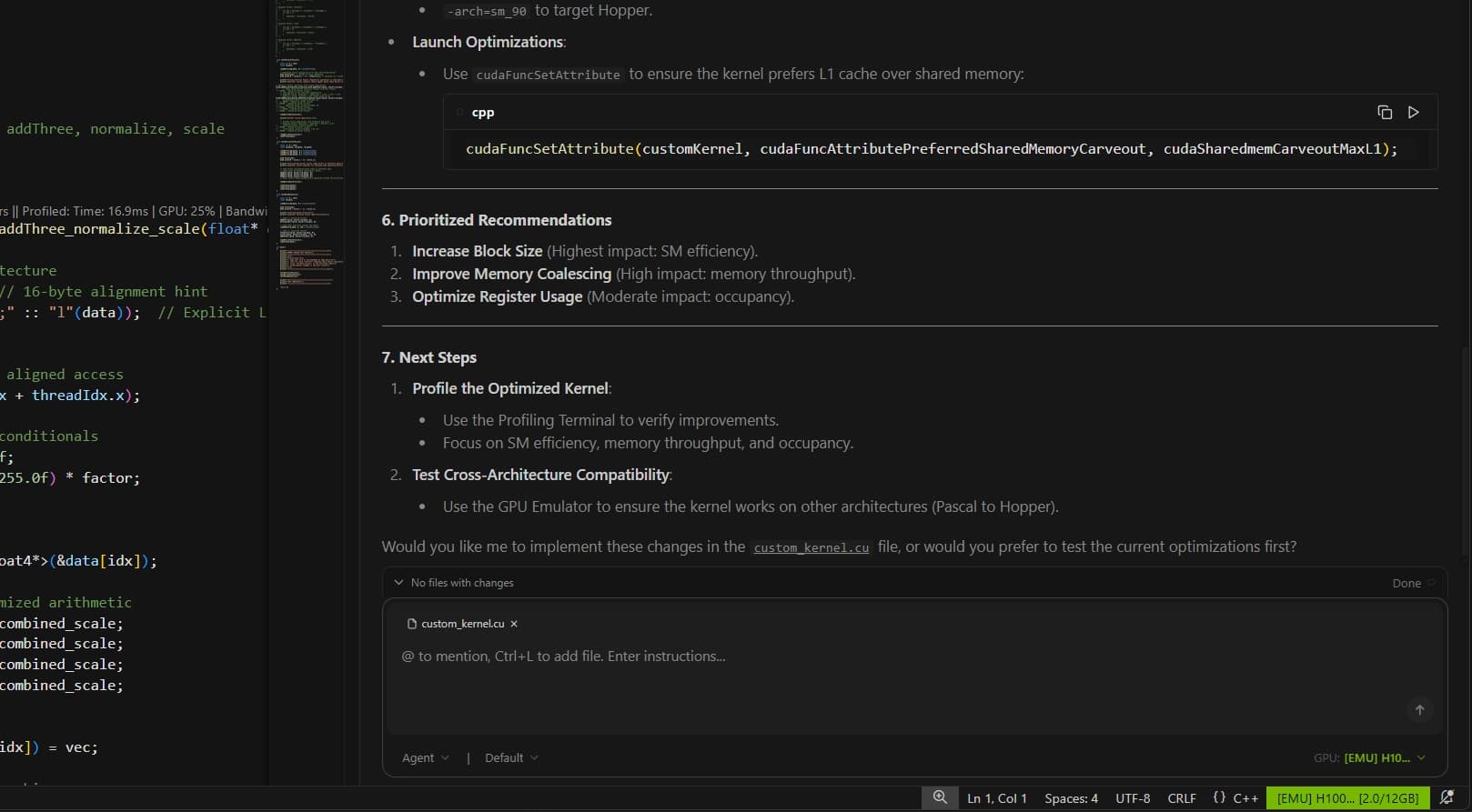

Know exactly what's slowing you down

The AI reads your profiling results and tells you exactly what to fix for your specific GPU.

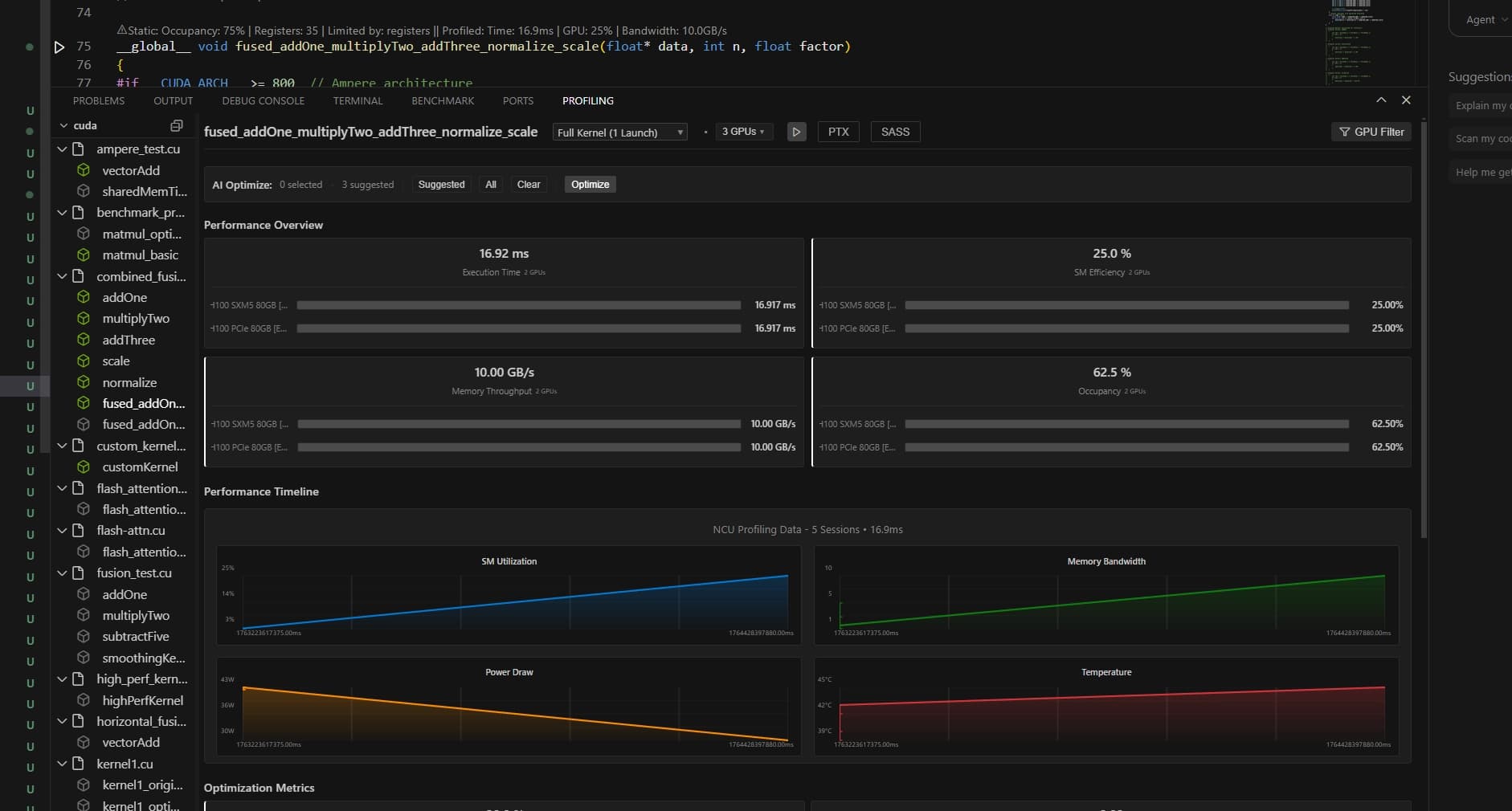
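One common way to reason about what limits a kernel on a specific GPU is the roofline model: compare the kernel's arithmetic intensity (FLOPs per byte moved) with the GPU's ratio of peak compute to peak memory bandwidth. The sketch below illustrates that idea only; it is not RightNow's analysis, and `GpuSpec`, `classify_kernel`, and the hardware numbers are hypothetical placeholders rather than vendor specifications.

```python
from dataclasses import dataclass


@dataclass
class GpuSpec:
    name: str
    peak_flops: float      # peak arithmetic throughput, FLOP/s
    peak_bandwidth: float  # peak memory bandwidth, bytes/s


def classify_kernel(flops: float, bytes_moved: float, gpu: GpuSpec) -> str:
    """Classify a kernel as memory-bound or compute-bound (roofline model)."""
    intensity = flops / bytes_moved              # FLOP per byte for this kernel
    ridge = gpu.peak_flops / gpu.peak_bandwidth  # FLOP per byte the GPU can sustain
    return "memory-bound" if intensity < ridge else "compute-bound"


# Placeholder hardware numbers chosen for easy arithmetic, not real specs.
gpu = GpuSpec("example-gpu", peak_flops=50e12, peak_bandwidth=2e12)

# float32 vector add: 1 FLOP per element, 12 bytes moved (two loads, one store).
n = 1_000_000
print(classify_kernel(flops=n, bytes_moved=12 * n, gpu=gpu))
```

A memory-bound result suggests looking at data movement (coalescing, reuse, fusion) before tuning arithmetic.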

Scale your testing across hardware

Profile across multiple GPUs at once. Compare metrics side by side and catch regressions early.
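As a rough illustration of what catching a regression across hardware can mean, the sketch below compares per-GPU kernel timings against a baseline and reports any run that slowed down by more than a tolerance. The function name, data layout, GPU labels, and the 5% threshold are assumptions made for this example, not part of RightNow.

```python
def find_regressions(baseline, current, tolerance=0.05):
    """Return {(gpu, kernel): relative_slowdown} for runs slower than
    the baseline by more than `tolerance` (0.05 means 5%)."""
    regressions = {}
    for key, base_ms in baseline.items():
        cur_ms = current.get(key)
        if cur_ms is None:
            continue  # no matching measurement in the current run
        slowdown = (cur_ms - base_ms) / base_ms
        if slowdown > tolerance:
            regressions[key] = slowdown
    return regressions


# Hypothetical timings in milliseconds, keyed by (gpu, kernel).
baseline = {("gpu-a", "matmul"): 1.00, ("gpu-b", "matmul"): 2.00}
current = {("gpu-a", "matmul"): 1.02, ("gpu-b", "matmul"): 2.50}

for (gpu, kernel), slowdown in find_regressions(baseline, current).items():
    print(f"{kernel} on {gpu}: {slowdown:.0%} slower than baseline")
```

Keying results by GPU and kernel keeps a slowdown on one device from being hidden by an improvement on another.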

Code locally, run on cloud GPUs

Write code on your laptop and execute on cloud H100s instantly. No setup required.

Latest updates and improvements

New: stable SSH remote workflows, custom agents with skills and MCP, and a clear list of cloud and local LLMs. Also new: Numba, CUDA Tile, and Mojo support with docs, autocomplete, emulation, profiling, and benchmarking.

CLI Swarm Agent with 32 parallel Coder+Judge pairs. Up to 5x faster than torch.compile() with 97.6% correctness.
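The Coder+Judge pattern can be pictured as many independent pairs running in parallel: a coder proposes a candidate, a judge checks it against a reference, and the fastest accepted candidate wins. The sketch below is a toy illustration of that pattern under stated assumptions. `coder`, `judge`, and `run_pair` are hypothetical stand-ins (no LLM calls, simulated runtimes) and do not reflect how the Swarm Agent is actually implemented.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_PAIRS = 32  # pair count quoted in the text above


def reference(x):
    """Ground-truth behaviour every candidate must reproduce."""
    return x * x


def coder(seed):
    """Stand-in for a code-generating agent: returns a candidate
    function and a simulated runtime in milliseconds."""
    if seed % 3 == 0:
        candidate = lambda x: x * x + 1  # deliberately wrong candidate
    else:
        candidate = lambda x: x ** 2     # correct candidate
    simulated_ms = 1.0 + (seed * 7 % 11) / 10
    return candidate, simulated_ms


def judge(candidate):
    """Stand-in for a judging agent: compares against the reference."""
    return all(candidate(x) == reference(x) for x in range(-5, 6))


def run_pair(seed):
    candidate, simulated_ms = coder(seed)
    return {"seed": seed, "correct": judge(candidate), "ms": simulated_ms}


# Run all pairs concurrently, then keep only candidates the judge accepted.
with ThreadPoolExecutor(max_workers=NUM_PAIRS) as pool:
    results = list(pool.map(run_pair, range(NUM_PAIRS)))

accepted = [r for r in results if r["correct"]]
best = min(accepted, key=lambda r: r["ms"])
print(f"{len(accepted)}/{NUM_PAIRS} accepted; best seed={best['seed']}")
```

Separating generation from judging means a fast but incorrect candidate can never be selected, whatever its measured speed.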

Profile, benchmark, and emulate PyTorch kernels directly in the editor, using the same workflow as for CUDA, Triton, TileLang, and CuTe.
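For readers unfamiliar with what a kernel benchmark involves, here is a minimal timing-harness sketch: warm up, time several repeats, and report the median. It is a generic illustration, not the editor's benchmarking code. The `benchmark` helper and its parameters are assumptions, and the workload is a CPU stand-in so the example runs without a GPU.

```python
import statistics
import time


def benchmark(fn, *args, warmup=3, repeats=10, sync=None):
    """Return the median wall-clock time of fn(*args) in milliseconds.

    `sync` is an optional callable run after each call. GPU work is
    asynchronous, so for PyTorch on CUDA you would pass
    torch.cuda.synchronize to wait for the kernel to finish.
    """
    for _ in range(warmup):  # warm-up runs are not timed
        fn(*args)
    if sync is not None:
        sync()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        if sync is not None:
            sync()
        samples.append((time.perf_counter() - start) * 1e3)
    return statistics.median(samples)


def cpu_stand_in(n):
    """Placeholder workload so the sketch runs without a GPU."""
    return sum(i * i for i in range(n))


median_ms = benchmark(cpu_stand_in, 10_000)
print(f"median: {median_ms:.3f} ms")
```

The median is used instead of the mean so that a single slow outlier, such as a one-off compilation or cache miss, does not skew the result.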

Support for CUDA, Triton, TileLang, and CuTe, with documentation retrieval that takes your GPU and code context into account.