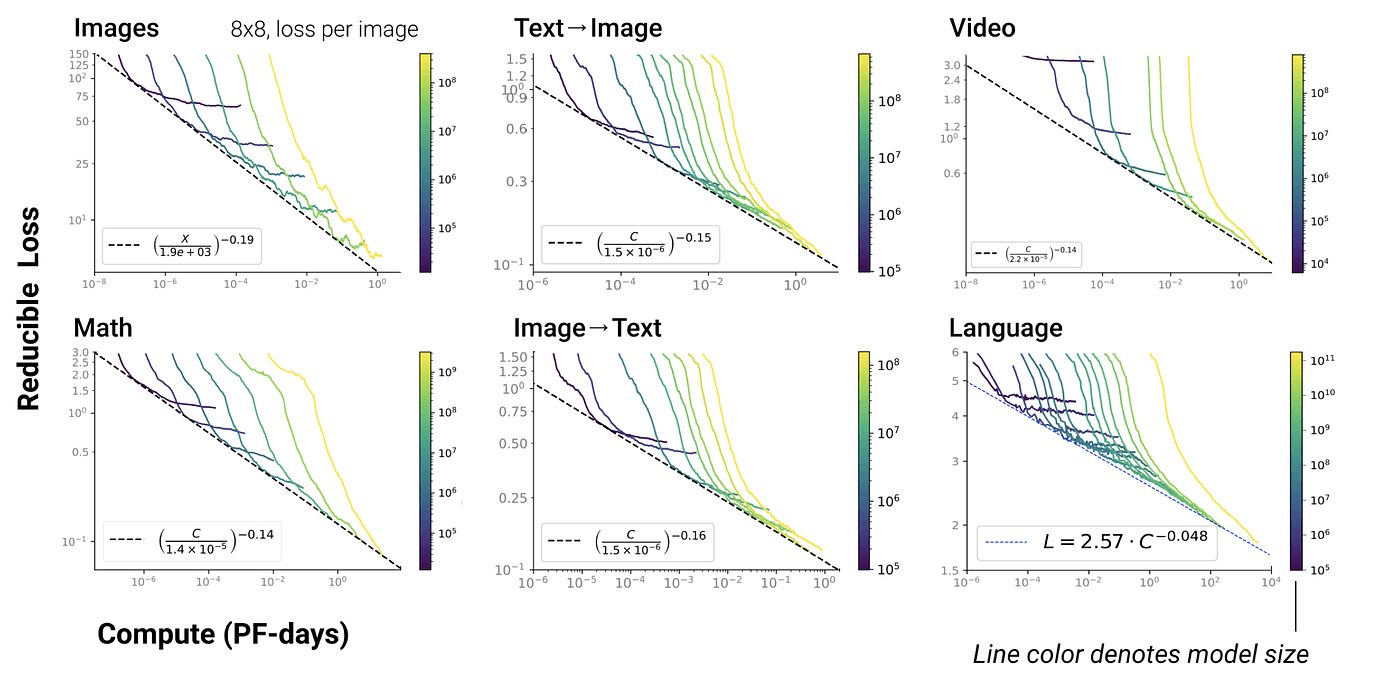

When researchers plot model cross-entropy loss against compute on a log-log scale, the result is a near-straight line: loss falls predictably as compute, model size, or training tokens increase. That empirical regularity - the scaling law - lets teams forecast returns, but it also shows the limit: buying more GPUs gives diminishing marginal returns.

DeepMind's compute-optimal work later showed that, for a fixed compute budget, training more tokens at the right model size can outperform simply increasing parameter count. That is why tokens and data hygiene matter as much as raw model scale.

Those two facts set the problem we care about. The engineering question is not whether the scaling law exists. The question is how to shift the intercept of that log-log line so the same FLOPs buy lower loss. Below I outline the mechanisms that reliably move the intercept, give a one-week playbook you can run on any stack, and explain what we're building at RightNow AI.

1) Make every token more informative - data hygiene and targeting. Remove duplicates and low-value text. Score and weight high-signal examples. Generate small, targeted synthetic datasets aimed at real failure modes rather than dumping random synthetic text into training. These steps increase sample efficiency and raise the effective value of each training step.

2) Raise effective capacity without linear FLOPs - algorithmic tricks. Conditional compute (sparsity, MoE) activates only the parameters you need per token. Low-rank adapters (LoRA) let you fine-tune capability with far fewer trainable parameters. Practical quantization (e.g., 4-bit workflows) reduces memory and bandwidth costs while preserving accuracy. These techniques change the constants in the scaling law: the slope stays, the intercept drops.

3) Squeeze the hardware - systems engineering that converts paid cycles into useful progress. Profile real runs and fix the hot paths. Replace IO-heavy attention with IO-aware kernels (FlashAttention), fuse kernels to eliminate extra copies, optimize memory layouts, and tune your mix of pipeline/tensor/data parallelism. Memory sharding (ZeRO) reduces per-GPU memory pressure and communication stalls. These fixes turn idle or blocked cycles into FLOPs that actually reduce loss.

Stack those three groups and you lower loss for the same FLOP budget - effectively shifting the whole line downward on the log-log plot.

Loss (log)

|

|\

| \\

| \\\ original scaling (Kaplan-style power law)

| \\\

| \\\ ← after systems optim (FlashAttention, ZeRO)

| \\\

| \\\ ← after algorithmic optim (MoE, LoRA, quant)

| \\\ ← after data optim (dedupe, targeted synth)

+------------------------------------ Compute (log)

C0 C1 C2 C3Interpretation: The slope (the scaling exponent) remains. Data, algorithm, and system interventions lower the intercept - same compute, lower loss.

Typical waste breakdown (illustrative) +-----------------------------------+ | Duplicates / low-value tokens : 30% | | Kernel inefficiencies : 25% | | Communication / imbalance : 20% | | Checkpoint / IO overhead : 15% | | Suboptimal hyperconfig : 10% | +-----------------------------------+

Recovering even a portion of these losses can produce the effective output of a much larger cluster.

Always convert improvements into dollars or experiment counts: seconds saved → GPU-hours saved → experiments gained per month.

If you want the technical appendix (Kaplan formula, worked compute→loss examples, and an anonymized profiler trace with exact fixes), it's ready to publish as a linked appendix or gated notebook.

We are building an integrated stack that combines continuous kernel-level profiling, actionable optimization suggestions, and data-centric tooling for targeted synthetic examples. In internal tests, combining kernel and data fixes produced single-digit to low-double-digit reductions in cost-per-loss-point and shortened iteration cycles enough to run materially more experiments for the same budget.

We will publish a reproducible notebook and an anonymized trace so you can verify the before/after wall-clock and loss curves.

If you are a systems engineer who wants fewer blind guesses, a researcher who needs faster iteration, or a model owner who wants to reduce training cost without losing capability, download RightNow and start optimizing your kernels today.

Jaber, RightNow AI